Classifying data efficiently is a cornerstone of modern algorithms. When two groups can be divided by a straight line, a flat plane, or in higher dimensions a hyperplane, we call this property linear separability. This fundamental idea shapes how models learn patterns and make predictions.

Many real-world problems rely on this concept. From spam filters to medical diagnoses, whether a clear decision boundary exists affects how accurate a simple model can be. Linear classifiers such as logistic regression work best when the classes are linearly separable or nearly so.

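Support vector machines and neural networks also build on this principle: an SVM searches for the separating hyperplane with the widest margin, and the final layer of a neural network is itself a linear classifier applied to learned features. Recognizing whether a problem requires linear or non-linear solutions saves time and resources. This knowledge helps data scientists choose the right tools for each task.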

Throughout this guide, we’ll explore verification methods, mathematical foundations, and practical applications. Understanding these core concepts opens doors to more advanced techniques in artificial intelligence.

What Is Linear Separability in Machine Learning?

Imagine slicing an apple cleanly in half with one swift motion. This visual represents how algorithms divide distinct groups in classification tasks. The mathematical foundation traces back to Frank Rosenblatt’s 1957 perceptron, which showed how a weight vector and a bias term can define a dividing line.

Defining the Dividing Line

A hyperplane acts as the mathematical knife-edge separating classes. In two dimensions, it appears as a straight line defined by:

w₁x₁ + w₂x₂ + b = 0

Higher dimensions extend this concept to planes and beyond. The bias term (b) determines the boundary’s offset from the origin, while weights (w) control its orientation.
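For reference, the standard formal definition can be written as follows, assuming class labels are encoded as yᵢ ∈ {−1, +1} (a notational choice, not something stated above). A dataset of m points is linearly separable when one weight vector and one bias place every point strictly on its own class’s side of the boundary:

```latex
\exists\, \mathbf{w} \in \mathbb{R}^n,\ b \in \mathbb{R} \quad \text{such that} \quad y_i\,(\mathbf{w} \cdot \mathbf{x}_i + b) > 0 \quad \text{for all } i = 1, \dots, m
```

In two dimensions, w · xᵢ expands to w₁x₁ + w₂x₂, matching the line equation above.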

Why Separation Matters

Efficient classification depends on clear divisions between data clusters. Consider these key impacts:

| Scenario | Model Performance | Training Efficiency |
|---|---|---|
| Linearly separable | High accuracy | Fast convergence |
| Non-linear data | Requires transformations | Increased complexity |

Email spam filters showcase this principle effectively. The system learns to draw boundaries between legitimate messages and unwanted content based on word frequencies and patterns.

Modern applications extend beyond simple binary cases. Multi-class problems combine several hyperplanes in n-dimensional space, for example one per class in a one-vs-rest scheme, while kernel methods handle curved separations by implicitly mapping the data into a higher-dimensional feature space.
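As a sketch of the multi-class case, assuming scikit-learn and synthetic blob data chosen for illustration: `LinearSVC` uses a one-vs-rest scheme by default, so with three classes it learns three weight vectors and three biases, and it predicts the class whose hyperplane gives the highest score.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.svm import LinearSVC

# Synthetic, well-separated 3-class data in 2-D (illustrative only).
X, y = make_blobs(n_samples=150, centers=[[-5, 0], [0, 5], [5, 0]],
                  cluster_std=0.8, random_state=0)

# LinearSVC trains one-vs-rest by default: one hyperplane per class.
clf = LinearSVC()
clf.fit(X, y)

print(clf.coef_.shape)       # (3, 2): one weight vector per class
print(clf.intercept_.shape)  # (3,): one bias per class

# The predicted class is the one whose hyperplane gives the largest score w_k·x + b_k.
scores = X @ clf.coef_.T + clf.intercept_
manual = clf.classes_[np.argmax(scores, axis=1)]
print(np.array_equal(manual, clf.predict(X)))
```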

Mathematical Foundations of Linear Separability

Representing each example as a vector of features lets us describe class boundaries with linear algebra. These foundations enable algorithms to construct precise separations between distinct classes. The geometry behind this process combines algebra with spatial reasoning.

Hyperplanes and Decision Boundaries

A hyperplane serves as the fundamental separator in n-dimensional space. In 2D, it appears as a line; in 3D, a flat plane. The equation governing this boundary follows a consistent form:

w·x + b = 0

Key properties include:

- Normal vector (w) determines orientation

- Bias term (b) controls offset from origin

- Margin width (the distance from the boundary to the nearest points) indicates classification confidence
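To make these properties concrete, here is a small NumPy sketch; the function names are hypothetical helpers and the labels are assumed to be ±1. Dividing w·x + b by ‖w‖ gives the signed distance from a point to the hyperplane, and the smallest label-weighted distance is the geometric margin, which is positive only when the hyperplane separates the classes.

```python
import numpy as np

def signed_distance(X, w, b):
    """Signed Euclidean distance from each row of X to the hyperplane w·x + b = 0."""
    w = np.asarray(w, dtype=float)
    return (np.asarray(X, dtype=float) @ w + b) / np.linalg.norm(w)

def geometric_margin(X, y, w, b):
    """Smallest y_i * distance over the dataset (labels y assumed in {-1, +1}).

    Positive only if (w, b) puts every point on its own class's side.
    """
    return float(np.min(np.asarray(y) * signed_distance(X, w, b)))

# Illustrative 2-D points with labels in {-1, +1}.
X = np.array([[2.0, 2.0], [3.0, 3.0], [0.0, 0.0], [-1.0, 0.0]])
y = np.array([1, 1, -1, -1])
w, b = np.array([1.0, 1.0]), -2.0   # the line x1 + x2 = 2

print(signed_distance(X, w, b))
print(geometric_margin(X, y, w, b))  # approximately 1.414, i.e. sqrt(2)
```

Scaling w and b by the same positive constant leaves both quantities unchanged, which is why the direction of w, not its length, sets the boundary’s orientation.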

Linear Algebra Formulation

Weight vectors and bias terms create the separation mechanism. Consider these components:

| Element | Role | Impact |
|---|---|---|
| Weight vector | Defines boundary angle | Larger magnitudes mark more influential features (when features share a scale) |
| Bias term | Shifts position | Can absorb differences in class frequency |

Training a linear classifier typically involves:

- Calculating dot products between the weight vector and each input

- Minimizing classification errors

- Maximizing margin width (in support vector machines)

Logical AND Gate Example

This classic problem demonstrates manual weight calculation. The truth table shows when outputs equal 1:

(0,0)→0, (0,1)→0, (1,0)→0, (1,1)→1

A viable solution uses:

- Weights: [1, 1]

- Bias: -1.5

- Activation function: step at 0 (output 1 if w·x + b > 0, otherwise 0)

The resulting decision boundary, the line x₁ + x₂ = 1.5, separates the single true case from the three false ones. This example illustrates how simple models can solve linearly separable problems.
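The calculation can be checked directly. This NumPy sketch applies the weights [1, 1] and bias −1.5 from the list above to all four inputs, using a step activation that outputs 1 when the net input is strictly positive (an assumed convention; no input lands exactly on 0 here).

```python
import numpy as np

# Truth table for logical AND: inputs and expected outputs.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
y_and = np.array([0, 0, 0, 1])

w = np.array([1.0, 1.0])  # weights from the text
b = -1.5                  # bias from the text

def step(z):
    """Step activation: output 1 where z > 0, else 0."""
    return (z > 0).astype(int)

net_input = X @ w + b            # [-1.5, -0.5, -0.5, 0.5]
predictions = step(net_input)
print(predictions)                          # [0 0 0 1]
print(np.array_equal(predictions, y_and))   # True
```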

How to Check for Linear Separability

Multiple approaches exist to verify whether a dataset meets separation criteria. Choosing the right method depends on data complexity and available resources. These techniques range from simple visual checks to optimization-based methods.

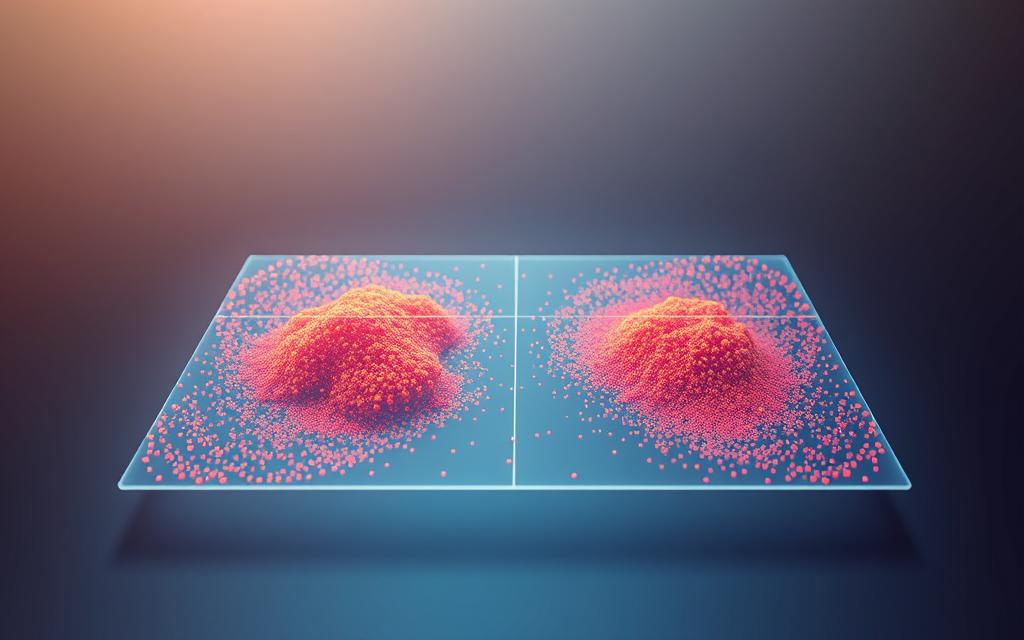

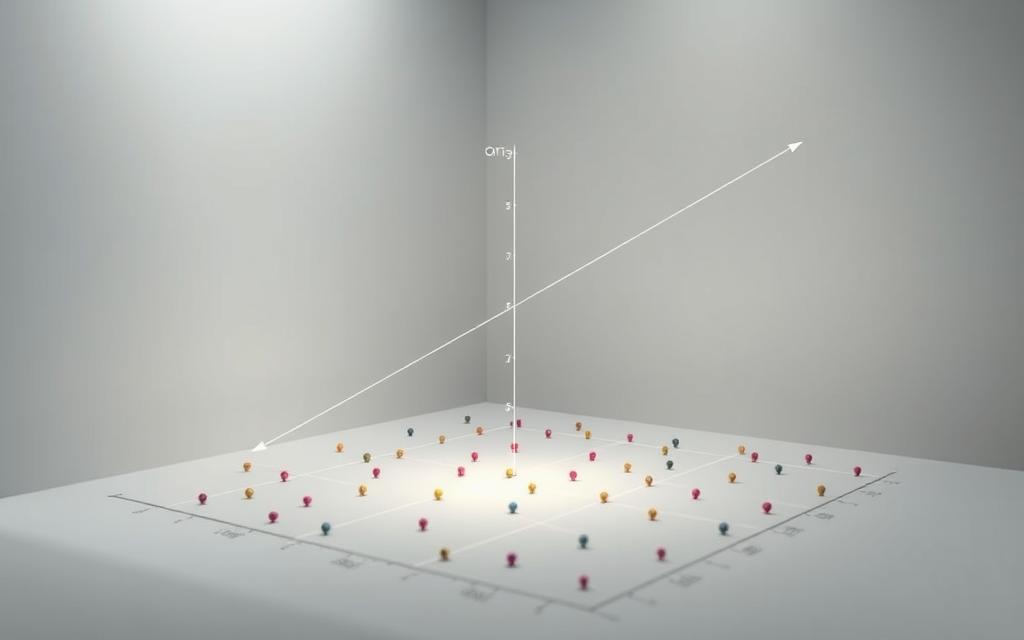

Visual Inspection (2D/3D Plots)

The simplest approach works for low-dimensional data. Scatter plots reveal clear patterns when:

- The data has only two or three features

- Clusters show distinct grouping

- No significant overlap exists

Python’s matplotlib makes this straightforward:

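```python
import matplotlib.pyplot as plt
from sklearn.datasets import make_blobs

X, y = make_blobs(n_samples=100, centers=2, random_state=0)  # example data; replace with your own X, y
plt.scatter(X[:, 0], X[:, 1], c=y)  # color each point by its class label
plt.show()
```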

Perceptron Learning Algorithm

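This classic method doubles as a separability test. It cycles through the training data and nudges the weights and bias toward every misclassified point. Its behavior is well understood:

- If the data is linearly separable, training stops after a finite number of updates with zero errors

- The number of updates is at most (R/γ)², where R bounds the length of the inputs and γ > 0 is the margin of some unit-norm separating hyperplane (with the bias folded into the weight vector)

- Starting from zero weights, the learning rate only rescales w and b, so any positive value gives the same sequence of predictions

The convergence theorem therefore covers every linearly separable dataset. An epoch with zero mistakes proves separability, because the final weights define a separating hyperplane. Failing to converge within a fixed number of epochs is inconclusive: the data may be non-separable, or it may simply need more epochs.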
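Below is a from-scratch sketch of the perceptron rule in NumPy, written as a separability check. The function name, the `max_epochs` cap, and the mapping of labels to {−1, +1} are assumptions for illustration. The XOR labels appear only as a contrast, since XOR is the textbook example of data that no single line can separate.

```python
import numpy as np

def perceptron(X, y, max_epochs=1000, lr=1.0):
    """Perceptron learning rule; labels y must be in {-1, +1}.

    Returns (w, b, converged). converged is True only if an epoch passes with
    zero mistakes, which proves the data is linearly separable. Reaching
    max_epochs is inconclusive.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for xi, yi in zip(X, y):
            if yi * (xi @ w + b) <= 0:  # misclassified or exactly on the boundary
                w += lr * yi * xi       # rotate the boundary toward xi's side
                b += lr * yi            # shift the boundary
                mistakes += 1
        if mistakes == 0:
            return w, b, True
    return w, b, False

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])

# AND gate with labels mapped to {-1, +1}: separable, so training converges.
w, b, ok = perceptron(X, np.array([-1, -1, -1, 1]))
print(ok)       # True

# XOR: no epoch can ever reach zero mistakes, so the cap is hit.
_, _, ok_xor = perceptron(X, np.array([-1, 1, 1, -1]), max_epochs=100)
print(ok_xor)   # False
```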

Support Vector Machines

A linear SVM offers another check through margin optimization: the hard-margin problem has a solution exactly when the data is linearly separable. Key aspects include:

| Approach | Advantage | Limitation |
|---|---|---|
| Hard margin | Exact separation | No noise tolerance |
| Soft margin | Handles outliers | Parameter tuning needed |

The quadratic programming formulation maximizes the gap between classes, which makes a linear SVM a natural verification tool.
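A sketch of this check with scikit-learn, assuming that a linear-kernel `SVC` with a very large `C` is an adequate stand-in for a hard margin (scikit-learn does not offer a separate hard-margin mode). The helper name and the value `C=1e6` are illustrative choices, and the result is only as reliable as the solver’s numerical tolerance.

```python
import numpy as np
from sklearn.datasets import make_blobs
from sklearn.svm import SVC

def separable_by_linear_svm(X, y, C=1e6):
    """Fit a linear SVM with a very large C (approximating a hard margin).

    Returns (all_correct, model). all_correct is True when the fitted hyperplane
    classifies every training point correctly, i.e. a separating hyperplane was found.
    """
    model = SVC(kernel="linear", C=C)
    model.fit(X, y)
    return model.score(X, y) == 1.0, model

# Two well-separated synthetic clusters (illustrative data).
X, y = make_blobs(n_samples=100, centers=[[-3, -3], [3, 3]], cluster_std=0.7, random_state=0)
all_correct, model = separable_by_linear_svm(X, y)
print(all_correct)

# Distance between the margin hyperplanes w·x + b = +1 and w·x + b = -1.
print(2 / np.linalg.norm(model.coef_[0]))
```

A `False` result suggests, but does not prove, non-separability: data separable by only a tiny margin might need an even larger `C`.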

Kernel Methods

Complex datasets require advanced transformations. Kernel tricks enable separation by:

- Mapping to higher dimensions

- Using polynomial or RBF functions

- Maintaining computational efficiency

Scikit-learn implements this seamlessly:

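```python
from sklearn.datasets import make_moons
from sklearn.svm import SVC

X_train, y_train = make_moons(n_samples=200, noise=0.1, random_state=0)  # example data; use your own split
model = SVC(kernel='rbf')  # RBF kernel: implicit mapping to a higher-dimensional space
model.fit(X_train, y_train)
```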

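These methods extend verification beyond linear boundaries. Keep in mind that a perfect fit with a kernel SVM shows the data is separable in the kernel’s feature space, not that it is linearly separable in the original features.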

Transforming Non-Linear Data for Linear Separability

Complex datasets often need reshaping before a linear model can classify them. When raw features don’t form clear divisions, transformations can map them into a space where a hyperplane does separate the classes. These techniques let simpler models handle intricate relationships.

Polynomial Feature Expansion

Adding polynomial terms lets a linear model draw curved boundaries: a hyperplane in the expanded feature space corresponds to a curve in the original space. A quadratic expansion of a single feature might convert:

x → [x, x²]

Key considerations include:

- Degree selection balances flexibility vs overfitting

- Feature count grows combinatorially: expanding n features to degree d yields C(n + d, d) − 1 terms, excluding the constant column

- Feature scaling becomes critical

Scikit-learn’s PolynomialFeatures automates this process. Higher degrees capture more complex shapes but risk memorizing noise.

Kernel Tricks in SVMs

Support vector machines avoid explicit transformations by using kernel functions. These compute dot products in the expanded space directly from the original coordinates, without ever constructing the mapped vectors.

| Kernel | Formula | Best For |
|---|---|---|
| RBF | exp(−γ‖x − y‖²) | Smooth, localized boundaries such as circular clusters |
| Polynomial | (x·y + c)^d | Polynomial-shaped boundaries and feature interactions |

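The Radial Basis Function kernel excels at handling circular clusters. Its γ parameter controls flexibility: a larger γ makes each training point’s influence more local, producing more intricate boundaries and a higher risk of overfitting.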
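The polynomial row of the table can be verified numerically. This sketch uses hypothetical helper names and assumes 2-D inputs, degree d = 2, and c ≥ 0. It compares the kernel value computed in the original space against an explicit 6-dimensional feature map φ; the two agree, which is exactly the shortcut SVMs exploit.

```python
import numpy as np

def poly_kernel(x, z, c=1.0):
    """Degree-2 polynomial kernel (x·z + c)^2, computed in the original space."""
    return (x @ z + c) ** 2

def poly_features(x, c=1.0):
    """Explicit 6-D feature map phi for a 2-D input, with phi(x)·phi(z) = (x·z + c)^2."""
    x1, x2 = x
    return np.array([
        x1 ** 2,
        x2 ** 2,
        np.sqrt(2) * x1 * x2,
        np.sqrt(2 * c) * x1,
        np.sqrt(2 * c) * x2,
        c,
    ])

x = np.array([1.0, 2.0])
z = np.array([3.0, -1.0])

print(poly_kernel(x, z))                    # 4.0, since (1*3 + 2*(-1) + 1)^2 = 2^2
print(poly_features(x) @ poly_features(z))  # same value, up to floating-point rounding
```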

Neural Networks and Manifold Learning

Neural networks automatically learn hierarchical transformations through hidden layers. Each stage gradually untangles the input space.

Autoencoders demonstrate this well:

- Compress input into lower dimensions

- Reconstruct original data

- Learn efficient representations

The manifold hypothesis suggests most real-world data lives near lower-dimensional surfaces. Deep learning models exploit this property.
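A small scikit-learn sketch of this idea, using `MLPClassifier` on `make_circles` data (the layer size, solver, and seeds are arbitrary choices). The hidden layer transforms the two rings into a 16-dimensional representation, and the output layer is just a linear classifier on that representation: recomputing it by hand from `coefs_` and `intercepts_` reproduces the network’s predictions.

```python
import numpy as np
from sklearn.datasets import make_circles
from sklearn.neural_network import MLPClassifier

X, y = make_circles(n_samples=200, factor=0.3, noise=0.05, random_state=0)

# One hidden layer of 16 ReLU units; lbfgs tends to work well on small datasets.
mlp = MLPClassifier(hidden_layer_sizes=(16,), activation="relu", solver="lbfgs",
                    max_iter=1000, random_state=0).fit(X, y)

# Learned representation: hidden-layer activations for each input.
H = np.maximum(0, X @ mlp.coefs_[0] + mlp.intercepts_[0])

# The output layer is a linear classifier on H: class 1 when w·h + b > 0.
logits = (H @ mlp.coefs_[1] + mlp.intercepts_[1]).ravel()
manual = mlp.classes_[(logits > 0).astype(int)]

print(H.shape)                                 # (200, 16)
print(np.array_equal(manual, mlp.predict(X)))
print(f"training accuracy: {mlp.score(X, y):.2f}")
```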

Python Example: Kernel SVM Implementation

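This complete workflow uses the make_circles dataset:

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

X, y = make_circles(n_samples=100, noise=0.1, factor=0.5, random_state=0)  # two noisy concentric circles
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

model = SVC(kernel='rbf', gamma=1)  # gamma controls how far each point's influence reaches
model.fit(X_train, y_train)
accuracy = model.score(X_test, y_test)  # accuracy on held-out data, not the training set
print(f"Test accuracy: {accuracy:.2f}")
```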

Key takeaways:

- RBF kernel handles non-linear separation

- Gamma controls decision boundary flexibility

- No explicit transformation needed

Plotting the decision boundary with matplotlib should show a roughly circular boundary between the two rings.
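One way to produce that visualization, assuming matplotlib and a shaded-grid approach (the grid resolution, padding, colormap, and the `factor`/`random_state` values are illustrative choices): evaluate `decision_function` on a dense grid and draw its zero contour, which is the decision boundary.

```python
import matplotlib.pyplot as plt
import numpy as np
from sklearn.datasets import make_circles
from sklearn.svm import SVC

X, y = make_circles(n_samples=100, noise=0.1, factor=0.5, random_state=0)
model = SVC(kernel="rbf", gamma=1).fit(X, y)

# Evaluate the decision function on a grid covering the data.
x1 = np.linspace(X[:, 0].min() - 0.5, X[:, 0].max() + 0.5, 300)
x2 = np.linspace(X[:, 1].min() - 0.5, X[:, 1].max() + 0.5, 300)
xx1, xx2 = np.meshgrid(x1, x2)
grid = np.column_stack([xx1.ravel(), xx2.ravel()])
Z = model.decision_function(grid).reshape(xx1.shape)

# Shade by decision value; the zero level set is the decision boundary.
fig, ax = plt.subplots()
ax.contourf(xx1, xx2, Z, levels=20, cmap="RdBu", alpha=0.5)
ax.contour(xx1, xx2, Z, levels=[0], colors="k")
ax.scatter(X[:, 0], X[:, 1], c=y, edgecolors="k")
ax.set_title("RBF SVM decision boundary")
plt.show()
```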

Conclusion

Modern algorithms thrive when data clusters form distinct patterns. The ability to identify clear decision boundaries remains fundamental across industries, from healthcare diagnostics to fraud detection systems.

Verification methods such as the perceptron and linear SVMs tell you whether a simple linear model is enough. For linearly separable data, the perceptron shows how a very simple model can classify perfectly; when separations are more complex, kernel SVMs and neural networks take over.

Emerging techniques now push beyond traditional approaches. Edge computing enables real-time classification, while transformer architectures handle high-dimensional data problems. These advancements create new opportunities for businesses.

Start experimenting with separation concepts today. Practical implementation delivers immediate value across prediction tasks and pattern recognition systems.

FAQ

Why is linear separability important in classification problems?

Linear separability simplifies classification by allowing a straight line or hyperplane to distinguish between classes. Models like SVMs and perceptrons perform efficiently when data is linearly separable, reducing computational complexity.

How can I check if my dataset is linearly separable?

Use visual inspection for 2D/3D plots, apply the Perceptron Learning Algorithm, or leverage Support Vector Machines. If the perceptron completes an epoch with zero errors, the data is linearly separable; if it fails to converge, the result is inconclusive.

What role do hyperplanes play in linear separability?

A hyperplane acts as a decision boundary in high-dimensional space. It divides data points of different classes using weights and bias terms, forming the foundation for classifiers like SVMs.

Can non-linear data be made linearly separable?

Yes. Techniques like polynomial feature expansion, kernel tricks, or neural networks map non-linear data into a new feature space, often a higher-dimensional one, where a linear separation becomes possible.

How does the kernel trick improve linear separability?

A kernel computes dot products in a higher-dimensional feature space without explicitly computing the mapped coordinates, which lets an SVM find a maximum-margin hyperplane in that space. Common kernels include RBF and polynomial functions.

What’s an example of a linearly separable problem?

The logical AND gate is a classic case. Its inputs (0,0), (0,1), (1,0), and (1,1) can be split by a straight line such as x₁ + x₂ = 1.5, with only (1,1) on the positive side, making it linearly separable.