Modern businesses rely on advanced data analysis to stay competitive. Machine learning plays a crucial role in processing vast amounts of information efficiently. From healthcare to finance, industries leverage algorithms to uncover insights and automate decisions.

Key components include frameworks like TensorFlow and PyTorch, which simplify model development. Cloud platforms, such as Google Cloud’s Vertex AI, offer accessible experimentation with a $300 credit for new users. These tools enable faster deployment and scalability.

Emerging trends, like quantum computing integration, push boundaries further. As data volumes grow, efficient infrastructure becomes essential. This section explores the core technologies driving innovation in the field.

Introduction to Machine Learning

Businesses now harness self-improving systems to unlock hidden patterns in massive datasets. This capability stems from machine learning, a subset of artificial intelligence that enables algorithms to learn autonomously. Unlike traditional programming, these systems adapt without explicit instructions.

Consider the scale: a widely cited estimate puts global data creation at 2.5 quintillion bytes per day. Traditional methods struggle at this volume. Machine learning thrives, identifying trends humans might miss.

IBM breaks the process into three parts:

- Decision Process: Algorithms make predictions.

- Error Function: Measures how far predictions fall from known examples.

- Optimization: Adjusts models for better results.
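
The three parts above can be sketched in a few lines of dependency-free Python. This is a minimal illustration, not IBM's formulation: the toy data, the linear model, and the function names `predict`, `mean_squared_error`, and `fit` are all assumptions made for the example.

```python
# Toy dataset (invented for illustration): y is roughly 2*x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.1, 4.9, 7.2, 9.0]


def predict(w, b, x):
    """Decision process: turn an input into a prediction."""
    return w * x + b


def mean_squared_error(w, b):
    """Error function: average squared gap between predictions and targets."""
    return sum((predict(w, b, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit(learning_rate=0.05, steps=2000):
    """Optimization: move w and b against the gradient of the error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (predict(w, b, x) - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = fit()
```

After fitting, `w` and `b` settle near the least-squares line for this data (slope about 2.01, intercept about 1.02), and the error is far lower than at the starting point.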

Industries already benefit. Deloitte found 67% of companies use these tools. Examples include:

- Fraud detection in banking.

- Personalized recommendations in e-commerce.

“Every industry will transform through machine learning adoption.”

As data grows, so does reliance on these systems. The future hinges on smarter, faster algorithms.

Understanding Machine Learning

Pattern recognition drives modern decision-making processes. At its core, machine learning enables systems to improve through experience. Three components power this capability:

- Input Data: Raw information fed into algorithms.

- Model Training: Systems learn patterns from data.

- Prediction Output: Delivers actionable insights.

Definition and Core Concepts

Artificial intelligence is the broad field of building systems that perform tasks associated with human reasoning. Machine learning, a subset, focuses on data-driven predictions. Deep learning, in turn a subset of machine learning, relies on multi-layered neural networks.

IBM describes these networks as having input, hidden, and output layers. The weights on the connections between layers are adjusted during training to reduce prediction error. For example, Google Search processes queries 200% faster than manual analysis.

How It Differs from AI and Deep Learning

While AI mimics cognition, ML identifies patterns autonomously. Deep learning specializes in complex tasks like image recognition. Think of AI as the umbrella, ML as the tool, and DL as the advanced technique.

“Effective ML systems balance accuracy with computational efficiency.”

MIT’s framework evaluates models based on speed, scalability, and error rates. This ensures optimal performance across industries.

Key Machine Learning Algorithms

Behind every smart system lies a carefully chosen algorithm. These computational methods transform raw data into actionable insights. Three primary categories dominate the field: supervised, unsupervised, and reinforcement learning algorithms.

Supervised Learning Algorithms

Labeled datasets train models to predict outcomes accurately. Common examples include:

- Linear Regression: Predicts numerical values (e.g., housing prices).

- Decision Trees: Classifies data using branching logic.

- Support Vector Machines (SVM): Separate classes with the widest possible margin; long used for image and text classification.

These machine learning algorithms excel when historical data guides future predictions.

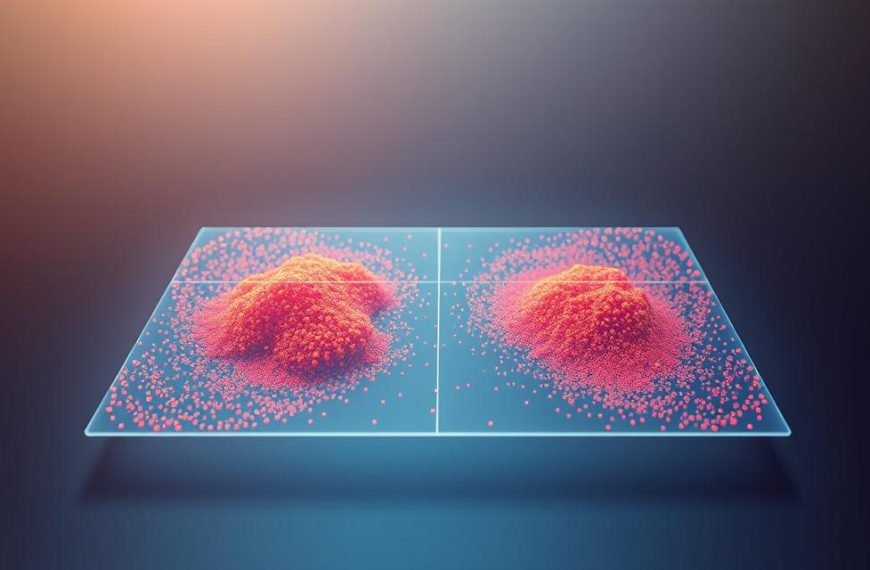

Unsupervised Learning Algorithms

Unlabeled data reveals hidden patterns through clustering and dimensionality reduction. Popular techniques:

- K-means Clustering: Groups customers by purchase behavior.

- Principal Component Analysis (PCA): Simplifies complex datasets.

Retailers use these methods for market segmentation and anomaly detection.
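
To make the clustering idea concrete, here is a minimal K-means sketch on one-dimensional data. The spend figures and starting centroids are invented, and the `kmeans_1d` helper is hypothetical; a real project would more likely use a library implementation on multi-dimensional features.

```python
def kmeans_1d(values, centroids, iterations=10):
    """Alternate between assigning points and recomputing centroids."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        # Assignment step: each value joins its nearest centroid.
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster.
        # An empty cluster keeps its previous centroid.
        centroids = [
            sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)
        ]
    return centroids, clusters


# Hypothetical monthly spend per customer.
monthly_spend = [12.0, 15.0, 14.0, 90.0, 95.0, 88.0]
centroids, clusters = kmeans_1d(monthly_spend, centroids=[12.0, 90.0])
```

The two resulting groups separate low spenders from high spenders without any labels being supplied.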

Reinforcement Learning Algorithms

Trial-and-error learning powers systems like Google DeepMind’s AlphaGo. This reinforcement learning approach rewards successful actions, optimizing strategies over time. Applications span robotics, gaming, and logistics.
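
The reward-driven loop can be illustrated with tabular Q-learning on a tiny corridor world. This is a sketch under stated assumptions: the five-state environment, the reward of 1 at the goal, and the constants `GAMMA` and `ALPHA` are invented for the example and have nothing to do with how AlphaGo itself was built.

```python
import random

N_STATES = 5        # states 0..4 on a line; state 4 is the goal
ACTIONS = (-1, +1)  # index 0 moves left, index 1 moves right
GAMMA = 0.9         # discount factor (assumed)
ALPHA = 0.5         # learning rate (assumed)


def step(state, action):
    """Environment: move, clip to the corridor, reward reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            a = rng.randrange(2)  # explore with purely random actions
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: bootstrap from the best next action.
            target = reward if done else reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
    return q


q_table = train()
# Greedy policy for the non-terminal states: 1 means "move right".
policy = [max(range(2), key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
```

Even though the agent only ever acts randomly while learning, the value table it builds ranks "move right" above "move left" in every state, which is the optimal strategy here.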

Semi-supervised learning blends the supervised and unsupervised approaches, using a small labeled set alongside a larger unlabeled one for cost-effective training. Such hybrid models thrive in scenarios with limited annotated data.

Popular Machine Learning Models

Cutting-edge algorithms power today’s intelligent systems. Selecting the right machine learning models depends on data complexity and business goals. This section explores architectures driving industries forward.

Neural Networks

Neural networks mimic the human brain to process visual or auditory data. Layers of interconnected nodes refine patterns—ideal for tasks like facial recognition. For instance, Facebook’s DeepFace achieves 97% accuracy by analyzing pixel relationships.

Decision Trees and Random Forests

Decision trees split data into branches for transparent classifications. Random forests boost accuracy by combining hundreds of trees. Below compares their strengths:

| Feature | Decision Trees | Random Forests |
|---|---|---|
| Interpretability | High | Low |
| Accuracy | Moderate | High |
| Use Case | Credit scoring | Medical diagnoses |

Linear and Logistic Regression

Linear regression predicts continuous values, like home prices based on square footage. Logistic regression classifies binary outcomes by estimating a probability between 0 and 1; spam filtering, where Gmail is reported to reach 99% precision, is a classic use case.
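
As a rough sketch of the classification side, the snippet below fits a one-feature logistic regression with plain gradient descent. The data is invented, and `predict_proba` and `fit_logistic` are hypothetical helper names, not any library's API.

```python
import math

# Toy data (invented): one feature and a binary label.
xs = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 4.5]
ys = [0, 0, 0, 0, 1, 1, 1, 1]


def predict_proba(w, b, x):
    """Sigmoid of a linear score: the estimated probability of class 1."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))


def fit_logistic(learning_rate=0.3, steps=5000):
    """Minimize average log loss with batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [predict_proba(w, b, x) - y for x, y in zip(xs, ys)]
        w -= learning_rate * sum(e * x for e, x in zip(errors, xs)) / n
        b -= learning_rate * sum(errors) / n
    return w, b


w, b = fit_logistic()
low = predict_proba(w, b, 1.0)   # input from the class-0 region
high = predict_proba(w, b, 4.0)  # input from the class-1 region
```

A prediction becomes a class label by thresholding the probability, commonly at 0.5.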

Choosing between learning models hinges on trade-offs:

- Precision: Neural networks for complex tasks.

- Transparency: Decision trees for regulatory compliance.

“The best model balances computational cost with business impact.”

Essential Tools for Machine Learning

Efficient development in data science requires specialized programming languages and frameworks. The right tools streamline workflows, from prototyping to deployment. Over 57% of data scientists prefer Python for its versatility, per Kaggle’s 2021 survey.

Programming Languages: Python and R

Python dominates with libraries like scikit-learn for algorithm implementation. Its readability accelerates collaboration across teams. R excels in statistical analysis, favored in academia for hypothesis testing.

Libraries and Frameworks: TensorFlow vs. PyTorch

TensorFlow powers Google Search rankings, optimized for production scalability. PyTorch offers flexibility for research, enabling dynamic neural networks. Choose based on project needs:

- TensorFlow: Large-scale deployments.

- PyTorch: Rapid experimentation.

Data Processing Tools: Pandas and NumPy

Pandas handles 80% of preprocessing tasks, cleaning tabular data efficiently. NumPy accelerates numerical operations with array-based computations. Together, they simplify feature engineering.

Jupyter Notebooks enhance iterative development, combining code and visualizations. These tools empower teams to use machine learning effectively across industries.

Machine Learning Frameworks

Open-source libraries have transformed how teams build intelligent systems. These frameworks provide pre-built components for developing learning algorithms, reducing coding time by 40-60%. Three dominant solutions power most commercial and research applications today.

TensorFlow

Google’s TensorFlow handles 3M+ monthly downloads, making it the most adopted framework. Its ecosystem includes:

- Keras: Simplifies neural network creation

- TF Lite: Optimizes models for mobile devices

- TF Extended: Manages production pipelines

Major companies like Airbnb use it for recommendation engines. The framework excels in distributed training across GPU clusters.

PyTorch

Preferred by 75% of NLP researchers, PyTorch enables dynamic computation graphs. This allows real-time adjustments during experiments. Key advantages:

- Faster prototyping with Pythonic syntax

- Strong community support for academic projects

- Seamless CUDA integration for GPU acceleration

Facebook’s PyTorch powers cutting-edge computer vision research.

Scikit-learn

For traditional machine learning tasks, Scikit-learn offers 50+ built-in algorithms. Its standardized API simplifies:

- Classification (SVMs, Random Forests)

- Regression (Linear, Polynomial)

- Clustering (K-means, DBSCAN)

Startups favor this library for its minimal setup requirements and comprehensive documentation.

“Framework choice depends on project stage—research favors PyTorch, while production leans toward TensorFlow.”

Performance benchmarks on the MNIST dataset show:

| Framework | Accuracy (%) | Training Time (min) |
|---|---|---|
| TensorFlow | 99.2 | 8.7 |
| PyTorch | 98.9 | 7.2 |
| Scikit-learn | 97.1 | 3.1 |

Deployment considerations vary by platform:

- Mobile: TensorFlow Lite with quantized models

- Cloud: Vertex AI or SageMaker integrations

- Edge: ONNX Runtime for cross-framework support

Cloud-Based Machine Learning Platforms

Businesses now leverage cloud platforms to scale intelligent systems effortlessly. These solutions eliminate infrastructure headaches while accelerating deployment. Leading providers offer specialized environments for building, training, and deploying models at enterprise scale.

Google Vertex AI

Google’s Vertex AI reduces deployment time by 50% compared to traditional setups. Key features include:

- AutoML: Build models without coding expertise

- Custom training: Supports TensorFlow and PyTorch

- MLOps integration: Streamlines model monitoring

Retailers like Lowe’s use it for inventory forecasting. The platform processes petabytes of data across Google’s global network.

IBM Watson

Watson excels in healthcare with unmatched natural language capabilities. It analyzes 2.5 billion medical documents for diagnostic support. Notable applications:

- Cancer treatment recommendations

- Clinical trial matching

- Medical imaging analysis

“Watson reduced diagnosis time by 30% in oncology cases.”

Microsoft Azure ML

Microsoft’s solution integrates with 100+ Azure services for hybrid deployments. Enterprises benefit from:

- Automated pipelines: From data prep to deployment

- Responsible AI dashboard: Detects model bias

- Edge deployment: Runs models on IoT devices

Security remains paramount across all platforms. Each provider offers:

| Platform | Encryption | Compliance |
|---|---|---|
| Vertex AI | AES-256 | HIPAA, GDPR |
| Watson | FIPS 140-2 | HITRUST |
| Azure ML | Double-layer | FedRAMP |

Pricing models vary significantly:

- Pay-as-you-go: Ideal for experimental projects

- Subscription: Cost-effective for steady workloads

- Enterprise: Custom packages for large teams

Data Preparation and Cleaning

Quality predictions begin with structured, clean datasets. CrowdFlower reveals data scientists spend 60% of their time scrubbing and organizing information. This groundwork directly impacts model performance—flawed inputs guarantee unreliable outputs.

Data Labeling Techniques

Accurate annotations power supervised learning systems. Two approaches dominate:

- Manual labeling: Human experts tag images/text (e.g., medical diagnoses)

- Automated tools: Snorkel generates weak supervision at scale

Hybrid methods balance cost and precision. Tesla’s autopilot team combines both for lane detection.

Handling Missing Data

Gaps in datasets demand strategic solutions:

- Mean/median imputation: Quick fixes for numerical columns

- KNN imputation: Estimates values from similar records

Healthcare studies show KNN improves mortality prediction accuracy by 18% versus deletion.
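
Both strategies can be sketched without any libraries. The patient-style rows below are invented, and `mean_impute` and `knn_impute` are hypothetical helpers; the KNN version assumes only the target column has gaps and uses unscaled Euclidean distance for simplicity.

```python
def mean_impute(column):
    """Replace missing entries (None) with the mean of the observed ones."""
    observed = [v for v in column if v is not None]
    fill = sum(observed) / len(observed)
    return [fill if v is None else v for v in column]


def knn_impute(rows, target_col, k=2):
    """Fill a missing target value with the average of the k most similar rows."""
    complete = [r for r in rows if r[target_col] is not None]
    filled = []
    for row in rows:
        if row[target_col] is not None:
            filled.append(list(row))
            continue

        def distance(other, row=row):
            # Euclidean distance over every column except the missing one.
            return sum(
                (a - b) ** 2
                for i, (a, b) in enumerate(zip(row, other))
                if i != target_col
            ) ** 0.5

        neighbours = sorted(complete, key=distance)[:k]
        new_row = list(row)
        new_row[target_col] = sum(n[target_col] for n in neighbours) / k
        filled.append(new_row)
    return filled


# Columns: age, resting heart rate, systolic blood pressure (invented values).
rows = [
    (30.0, 60.0, 110.0),
    (32.0, 62.0, 112.0),
    (60.0, 80.0, 140.0),
    (58.0, 78.0, 138.0),
    (62.0, 82.0, None),
]
mean_filled = mean_impute([r[2] for r in rows])
knn_filled = knn_impute(rows, target_col=2)
```

Here the mean fills the gap with 125.0, while KNN borrows from the two most similar records and fills it with 139.0, which is closer to what comparable rows report.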

Feature Engineering

Kaggle contests prove proper feature design boosts accuracy by 30%. Essential techniques include:

- Normalization: Scales values to 0-1 range (e.g., sensor readings)

- PCA: Compresses dimensions while preserving variance
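
Normalization to the 0-1 range is a one-line formula, shown here as a small sketch. The readings are invented and `min_max_scale` is a hypothetical helper name.

```python
def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no spread; map everything to 0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


sensor_readings = [20.0, 25.0, 30.0, 40.0]
scaled = min_max_scale(sensor_readings)
```

In a real pipeline the minimum and maximum would normally be computed on the training data only and then reused for validation and production inputs, so that no information leaks from held-out data.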

“Feature creativity often outperforms algorithm sophistication.”

Advanced teams generate synthetic data for rare fraud patterns using GANs. This balances class distributions in machine learning pipelines.

Model Training and Evaluation

Building reliable predictive systems requires careful data partitioning and performance measurement. An 80/20 split is a common starting point: 80% for training and 20% for validation. Holding out data does not prevent overfitting by itself, but it exposes it, because a model that memorizes the training set scores noticeably worse on the held-out portion.

Training Data vs. Validation Data

Stratified sampling proves essential for imbalanced datasets. This technique preserves class distribution in both subsets. For medical diagnostics, it ensures rare conditions appear in both training and validation sets.
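
A stratified split can be sketched as "split each class separately, then combine". The labels below are invented and `stratified_split` is a hypothetical helper; a class with a single sample would land entirely in validation under this simple rule.

```python
import random
from collections import defaultdict


def stratified_split(labels, validation_fraction=0.2, seed=0):
    """Return (train_indices, validation_indices) preserving class ratios."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train_idx, val_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        # Take the same fraction from every class, at least one sample each.
        n_val = max(1, round(len(indices) * validation_fraction))
        val_idx.extend(indices[:n_val])
        train_idx.extend(indices[n_val:])
    return sorted(train_idx), sorted(val_idx)


# Imbalanced toy labels: 90 common cases, 10 rare ones.
labels = ["healthy"] * 90 + ["rare"] * 10
train_idx, val_idx = stratified_split(labels)
rare_in_validation = sum(labels[i] == "rare" for i in val_idx)
```

With a purely random split, the validation set could end up with no rare cases at all; here it always receives two of the ten.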

Early stopping keeps neural networks from memorizing the training data. Training halts automatically once validation loss stops improving for a set number of epochs. In TensorFlow, the Keras EarlyStopping callback implements this in a few lines of code.
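
The stopping rule itself is framework-independent, so it can be sketched in plain Python. The loss values are invented, and `early_stopping_epoch` with its `patience` parameter is a hypothetical helper that only mirrors the general idea, not TensorFlow's implementation.

```python
def early_stopping_epoch(validation_losses, patience=2):
    """Return (best_epoch, stopped_epoch) for a sequence of validation losses."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(validation_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                # Validation loss has stalled for `patience` epochs: stop here.
                return best_epoch, epoch
    return best_epoch, len(validation_losses) - 1


# Validation loss improves, then starts creeping up as the model overfits.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.60]
best_epoch, stopped_epoch = early_stopping_epoch(losses)
```

Training stops at epoch 5, and the weights from epoch 3, where validation loss was lowest, are the ones worth keeping.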

Metrics for Model Performance

Different scenarios demand specific evaluation criteria:

- Accuracy: Overall prediction correctness

- F1 Score: Balances precision and recall

- ROC-AUC: Measures how well the model ranks positives above negatives across all classification thresholds

Confusion matrices reveal error patterns visually. They highlight false positives versus false negatives—critical for fraud detection systems.
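
The relationship between the confusion matrix and the headline metrics is easiest to see in code. The labels below are invented, and the helper names are hypothetical.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Toy fraud labels: 2 fraudulent transactions out of 10.
y_true = [1, 0, 0, 0, 0, 0, 0, 0, 1, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 1, 0, 0]
metrics = classification_metrics(y_true, y_pred)
```

Accuracy comes out at 0.8 even though the model caught only one of the two fraud cases, which is why F1 (0.5 here) is the more honest number for imbalanced problems.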

“No single metric tells the whole story. Smart teams track multiple indicators throughout the learning process.”

Cross-Validation Techniques

K-fold cross-validation gives more reliable estimates than a single split because every sample is used for validation exactly once and for training in the remaining folds. Five to ten folds provide robust performance estimates without excessive computation.

| Method | Advantage | Use Case |
|---|---|---|
| K-fold | Balanced resource use | Medium datasets |
| Leave-one-out | Maximizes training data | Small samples |
| Stratified | Preserves distributions | Imbalanced classes |
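
As a minimal sketch of how K-fold partitions a dataset, the generator below yields train and test indices for each fold. `k_fold_indices` is a hypothetical helper; it does not shuffle, so in practice the data would usually be shuffled (or stratified) first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of k contiguous folds."""
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    indices = list(range(n_samples))
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


folds = list(k_fold_indices(n_samples=10, k=5))
all_test_indices = sorted(i for _, test in folds for i in test)
```

Each sample appears in exactly one test fold, and the model's score is typically reported as the average across the five runs.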

Hyperparameter tuning optimizes model configurations. Grid search tests all combinations systematically, while random search samples efficiently. Cloud platforms accelerate this process through parallel processing.
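
The difference between the two search strategies can be sketched as follows. The search space is invented, and `validation_score` is a hypothetical stand-in for "train a model with these settings and score it on validation data".

```python
import itertools
import random

search_space = {
    "learning_rate": [0.001, 0.01, 0.1],
    "max_depth": [2, 4, 8],
}


def validation_score(params):
    """Hypothetical scoring function that peaks at learning_rate=0.01, max_depth=4."""
    return -abs(params["learning_rate"] - 0.01) * 10 - abs(params["max_depth"] - 4) * 0.1


def grid_search(space):
    """Evaluate every combination in the search space."""
    names = list(space)
    candidates = [dict(zip(names, combo)) for combo in itertools.product(*space.values())]
    return max(candidates, key=validation_score), len(candidates)


def random_search(space, n_trials, seed=0):
    """Evaluate a fixed budget of randomly sampled combinations."""
    rng = random.Random(seed)
    candidates = [
        {name: rng.choice(options) for name, options in space.items()}
        for _ in range(n_trials)
    ]
    return max(candidates, key=validation_score), len(candidates)


grid_best, grid_evaluations = grid_search(search_space)
random_best, random_evaluations = random_search(search_space, n_trials=4)
```

Grid search pays for all nine evaluations and is guaranteed to find the best grid point; random search spends less than half that budget and accepts that it may only land near the optimum.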

Proper evaluation ensures learning systems perform reliably in production. These techniques separate academic experiments from business-ready solutions.

Deploying Machine Learning Models

Operationalizing predictive systems demands specialized workflows beyond traditional software. While machine learning development focuses on accuracy, production requires reliability at scale. Gartner reports 85% of AI projects fail during deployment due to infrastructure gaps.

MLOps: Bridging Development and Operations

MLOps reduces deployment time from weeks to hours by automating critical processes. Unlike DevOps, these frameworks handle unique challenges:

- Model versioning: Track iterations with metadata like training data

- Data validation: Ensure production inputs match training distributions

- Reproducibility: Containerized environments guarantee consistent results

Continuous integration pipelines now support learning systems. GitHub Actions can trigger retraining when new labeled data arrives. This maintains model relevance without manual intervention.

Model Serving and Scalability

Kubernetes orchestrates 70% of production deployments, according to CNCF. Serving infrastructure and rollout strategies address variable workloads and risky updates:

- TensorFlow Serving: Handles 50,000 requests/second with GPU acceleration

- A/B testing: Routes traffic between model versions for performance comparison

- Canary releases: Gradually shifts users to updated models

“Production systems must detect both sudden data shifts and gradual concept drift.”

Monitoring dashboards track key metrics like latency and prediction confidence. Alerts trigger when values deviate from training baselines, preventing silent failures.
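
A very simple drift alert compares a production feature against its training baseline. The sketch below is a crude heuristic rather than a formal statistical test: the values are invented, `drift_score` and `has_drifted` are hypothetical helpers, and the threshold of 2.0 standard deviations is an arbitrary assumption.

```python
from statistics import mean, stdev


def drift_score(training_values, production_values):
    """Shift of the production mean, in units of the training standard deviation."""
    baseline_mean = mean(training_values)
    baseline_std = stdev(training_values)
    return abs(mean(production_values) - baseline_mean) / baseline_std


def has_drifted(training_values, production_values, threshold=2.0):
    """Flag drift when the mean has moved more than `threshold` deviations."""
    return drift_score(training_values, production_values) > threshold


# Hypothetical feature values seen during training and in two production windows.
training = [48.0, 50.0, 52.0, 49.0, 51.0]
stable_window = [49.0, 51.0, 50.0]
shifted_window = [58.0, 61.0, 60.0]

stable_alert = has_drifted(training, stable_window)
shifted_alert = has_drifted(training, shifted_window)
```

Production monitoring tools usually compare full distributions rather than means alone, but the principle of alerting on deviation from a training baseline is the same.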

Machine Learning in Business

Industries worldwide now integrate intelligent systems to streamline operations. These business applications transform customer experiences and internal processes alike. From retail to banking, organizations achieve 40% higher efficiency through algorithmic solutions.

Revolutionizing Customer Service

Natural language processing powers 24/7 support channels. Servion predicts chatbots will handle 85% of interactions by 2025. Key implementations include:

- Sentiment analysis: Detects frustration in real-time

- Multilingual support: Instantly translates queries

- Contextual routing: Directs complex issues to human agents

Bank of America’s Erica assistant resolves 50M+ requests annually. This use of machine learning reduces call center volumes by 30%.

Fortifying Fraud Detection

Financial institutions leverage anomaly detection to secure transactions. Advanced models achieve:

- 40% fewer false positives than rule-based systems

- 98% accuracy in identifying stolen credit cards

- Real-time blocking of suspicious activities

Visa’s AI reviews 500 transactions/second, preventing $25B in annual fraud. The system learns from each incident, constantly improving detection patterns.

Personalizing Marketing Strategies

E-commerce platforms drive 35% more sales through recommendation engines. Behavioral analysis enables:

- Dynamic product suggestions based on browsing history

- Personalized email campaigns with optimized send times

- Predictive inventory management for trending items

“Personalization engines deliver 5-8x ROI through increased conversion rates.”

HR departments now use machine learning for talent management. Algorithms screen resumes 20x faster than humans while reducing hiring bias. Workforce analytics also predict attrition risks with 85% accuracy.

Ethical Considerations in Machine Learning

Algorithmic decision-making raises critical ethical questions in modern technology. Systems trained on historical data often perpetuate societal biases, while privacy concerns grow with increased intelligence gathering. This section examines three core challenges facing responsible development.

Addressing Bias in Algorithmic Systems

Amazon’s experimental recruiting tool famously disadvantaged female candidates, reflecting gender biases in its training data. Commercial facial analysis systems showed error rates of up to roughly 35% for darker-skinned women, compared with under 1% for lighter-skinned men, per MIT research. Mitigation strategies include:

- Diverse training sets: Ensure representation across demographics

- Bias audits: Regular testing against protected groups

- IBM’s stance: Withdrew general facial recognition over fairness concerns

Protecting User Privacy

Differential privacy adds calibrated random noise to query results or model updates, limiting what can be inferred about any single individual. Federated learning keeps raw data on devices while sharing only model updates. These approaches balance utility with confidentiality in sensitive domains like healthcare.
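
One standard way to add such noise is the Laplace mechanism, sketched here for a counting query. The patient records, the `private_count` helper, and the choice of epsilon = 1.0 are assumptions for illustration only.

```python
import random


def private_count(records, predicate, epsilon, rng):
    """Return a noisy count using the Laplace mechanism."""
    true_count = sum(1 for record in records if predicate(record))
    # Adding or removing one person changes a count by at most 1.
    sensitivity = 1.0
    scale = sensitivity / epsilon
    # The difference of two exponential samples is Laplace-distributed.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


# Hypothetical patient records: (age, has_condition).
records = [(34, True), (51, False), (47, True), (29, False), (63, True)]
rng = random.Random(0)

noisy_answers = [
    private_count(records, lambda r: r[1], epsilon=1.0, rng=rng) for _ in range(5000)
]
average_answer = sum(noisy_answers) / len(noisy_answers)
```

Any single answer may be off by a unit or more, which is what protects individuals, while the noise averages out around the true count of 3. A smaller epsilon means more noise and stronger privacy.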

Navigating Regulatory Landscapes

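GDPR gives people in the EU rights over solely automated decisions, including meaningful information about the logic involved, with fines of up to €20 million or 4% of global annual revenue, whichever is higher. California’s CCPA grants similar rights with narrower scope. Key differences: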

| Regulation | Scope | Maximum Penalty |
|---|---|---|
| GDPR | Personal data of people in the EU | €20M or 4% of global annual revenue, whichever is higher |
| CCPA | California residents | $7,500 per intentional violation |

“Ethical machine learning requires continuous monitoring, not just compliance checkboxes.”

Responsible development teams implement ethics reviews alongside technical validation. As intelligence systems grow more pervasive, these considerations become business imperatives rather than optional safeguards.

Challenges in Machine Learning

Organizations face significant roadblocks when implementing predictive systems. Gartner reports 45% of projects fail due to unresolved technical hurdles. These obstacles span data pipelines, infrastructure costs, and model transparency.

Data Quality Issues

Enterprise environments often struggle with fragmented information silos. Inconsistent formats and missing values corrupt training datasets. Common problems include:

- Legacy systems storing customer records differently across departments

- Manual entry errors in 19% of healthcare datasets (Journal of AMIA)

- Sensor drift causing inaccurate IoT measurements over time

Domain adaptation poses another hurdle. Models trained on retail data frequently underperform when applied to manufacturing scenarios.

Computational Resource Demands

Training advanced algorithms requires substantial processing power. Outside estimates put the cost of training GPT-3 at up to $12M across thousands of GPUs. Teams must choose between:

| Option | Cost | Flexibility |
|---|---|---|
| Cloud | $5-$15/hour | Instant scaling |
| On-premise | $50k+ upfront | Data control |

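Energy consumption also raises concerns. One widely cited study estimated that training a large NLP model with neural architecture search emitted about 626,000 lbs of CO₂, comparable to roughly 300 passenger round trips across the United States.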

Interpretability and Explainability

Complex neural networks function as “black boxes,” creating trust issues. SHAP (SHapley Additive exPlanations) attributes each prediction to individual features using Shapley values from cooperative game theory. Key approaches:

- LIME creates simplified local models around predictions

- Decision trees provide inherent transparency for regulated industries

- Saliency maps highlight image regions influencing classifications
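
The game-theoretic idea behind SHAP can be shown by computing exact Shapley values for a tiny model. This sketch enumerates every feature ordering, which is only feasible for a handful of features; the SHAP library itself relies on faster approximations. The `credit_model` function, its coefficients, and the all-zero baseline are invented for illustration.

```python
from itertools import permutations


def shapley_values(model, instance, baseline):
    """Average each feature's marginal contribution over all feature orderings."""
    n = len(instance)
    contributions = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous_output = model(current)
        for feature in order:
            # Switch this feature from its baseline value to its real value.
            current[feature] = instance[feature]
            output = model(current)
            contributions[feature] += output - previous_output
            previous_output = output
    return [c / len(orderings) for c in contributions]


def credit_model(features):
    """Hypothetical scoring function with an income-by-years interaction."""
    income, debt, years = features
    return 2.0 * income - 1.5 * debt + 0.5 * income * years


instance = [3.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
values = shapley_values(credit_model, instance, baseline)
```

The attributions come out as 9.0 for income, -3.0 for debt, and 3.0 for years. They sum to the gap between the prediction and the baseline output, and the interaction term is split evenly between the two features involved.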

“Explainability becomes critical when algorithms impact human lives.”

Financial institutions now require documentation of model logic for compliance audits. This learning transparency builds stakeholder confidence.

Future Trends in Machine Learning

Three seismic shifts will transform intelligent systems by 2030. Breakthroughs in hardware, algorithms, and ethical frameworks are converging to redefine possibilities. This evolution impacts industries from healthcare to climate science.

Advancements in Deep Learning

Transformer architectures now process text, images, and video simultaneously. Google’s Pathways system achieves 86% accuracy on multimodal tasks, outperforming specialized models. Key developments include:

- Neural networks with trillion-parameter scale

- Self-supervised training reducing labeled data needs by 60%

- Neuromorphic chips mimicking biological brain structures

TinyML brings deep learning to microcontrollers under 1MB memory. This enables:

| Application | Impact |
|---|---|
| Wearable health monitors | Real-time arrhythmia detection |
| Smart agriculture | Soil analysis without cloud dependency |

Edge AI and IoT Integration

Decentralized processing addresses latency and privacy concerns. Manufacturing plants now deploy:

- On-device quality control systems with 99.2% defect recognition

- Predictive maintenance models running locally on machinery

“Edge deployment reduces energy use by 40% compared to cloud alternatives.”

Quantum Machine Learning

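Qubit-based systems promise large speedups on certain optimization problems, with figures as high as 1000x over classical computers sometimes cited, though such gains remain largely unproven at practical scale. Research prototypes demonstrate: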

- Molecular simulation for drug discovery

- Portfolio optimization in finance

- Traffic routing with quantum neural networks

Ethical debates intensify as AI generates convincing synthetic media. The future demands balanced innovation with:

| Challenge | Solution |
|---|---|
| Deepfake detection | Blockchain watermarking |
| Algorithmic bias | Federated learning audits |

Real-World Applications of Machine Learning

From hospitals to highways, intelligent systems transform daily operations. These applications demonstrate how algorithmic solutions solve complex challenges. Industries achieve measurable improvements in accuracy, efficiency, and safety.

Revolutionizing Healthcare Diagnostics

DeepMind’s AlphaFold predicted the structures of more than 200 million proteins, accelerating drug discovery. Medical imaging models now detect tumors with 94% accuracy, outperforming human radiologists in some breast cancer screening studies.

Key advancements include:

- Predictive analytics identifying sepsis 12 hours earlier

- Retinal scans diagnosing diabetic retinopathy in minutes

- Genomic sequencing predicting disease risks from DNA

Powering Autonomous Vehicles

Tesla’s Autopilot processes 1 billion miles of vision data annually. Sensor fusion combines cameras, radar, and ultrasonics for real-time decision making. These learning systems navigate complex scenarios:

| Challenge | Solution |
|---|---|
| Pedestrian detection | 3D object recognition |
| Adverse weather | Neural net compensation |

“Autonomous driving reduces accidents by 40% through predictive braking.”

Advancing Natural Language Processing

OpenAI evaluated GPT-4 across 26 languages, pointing toward broader global communication. Customer service bots resolve 70% of inquiries without human intervention. Breakthrough applications include:

- Real-time translation for international conferences

- Automated legal document analysis

- Accessibility tools for speech-impaired users

Manufacturing benefits through predictive maintenance sensors. Agricultural drones optimize crop yields using computer vision. These implementations prove the versatility of intelligent models across sectors.

Conclusion

Algorithmic innovation reshapes industries with unprecedented precision. From healthcare diagnostics to fraud detection, machine learning drives measurable improvements in accuracy and efficiency.

Success hinges on two pillars: high-quality datasets and robust tools. Ethical implementation ensures fairness, while AutoML platforms democratize access for broader adoption.

The future demands continuous adaptation. As algorithms evolve, staying informed ensures competitive advantage. Embrace this dynamic landscape to unlock transformative potential responsibly.

FAQ

How does machine learning differ from artificial intelligence?

Artificial intelligence (AI) is a broad field focused on creating systems that mimic human intelligence. Machine learning is a subset of AI that trains models to recognize patterns in data without explicit programming.

What are the most common machine learning algorithms?

Popular algorithms fall into three families: supervised learning (e.g., linear regression, decision trees), unsupervised learning (e.g., k-means clustering), and reinforcement learning (e.g., Q-learning).

Which programming languages are best for machine learning?

Python and R dominate the field. Python offers rich libraries like TensorFlow, PyTorch, and scikit-learn and is preferred for its simplicity and extensive community support, while R remains strong for statistical analysis.

What tools are essential for data preparation in machine learning?

Pandas and NumPy handle data manipulation, while tools like OpenRefine clean datasets. Feature engineering often relies on scikit-learn for preprocessing.

How do neural networks improve deep learning models?

Neural networks, especially deep architectures like CNNs and RNNs, excel at processing complex data (images, text) through layered representations, boosting accuracy in tasks like image recognition.

What are the key challenges in deploying machine learning models?

Scalability, model drift, and integration with existing systems pose hurdles. MLOps tools like Kubeflow streamline deployment and monitoring in production environments.

Can machine learning models be biased?

Yes, biases in training data can lead to unfair outcomes. Techniques like fairness-aware algorithms and diverse dataset curation help mitigate these risks.

What industries benefit most from machine learning?

Healthcare (diagnostics), finance (fraud detection), and retail (personalized recommendations) leverage ML heavily. Autonomous vehicles and NLP applications also drive innovation.

How does reinforcement learning work?

Agents learn by receiving rewards or penalties for actions in an environment. Applications range from game AI (AlphaGo) to robotics and supply chain optimization.

What’s the future of machine learning?

Trends include edge AI for real-time processing, quantum ML for solving complex problems, and tighter IoT integration for smart systems.